Outside of work, you may have heard the famous dictum “Correlation is not causation.” It may sound like a piece of theoretical fluff that, though involved in a recent Noble Prize in economics, isn’t relevant to your work as a demand planner. Is so, you may be only partially correct.

Extrapolative vs Causal Models

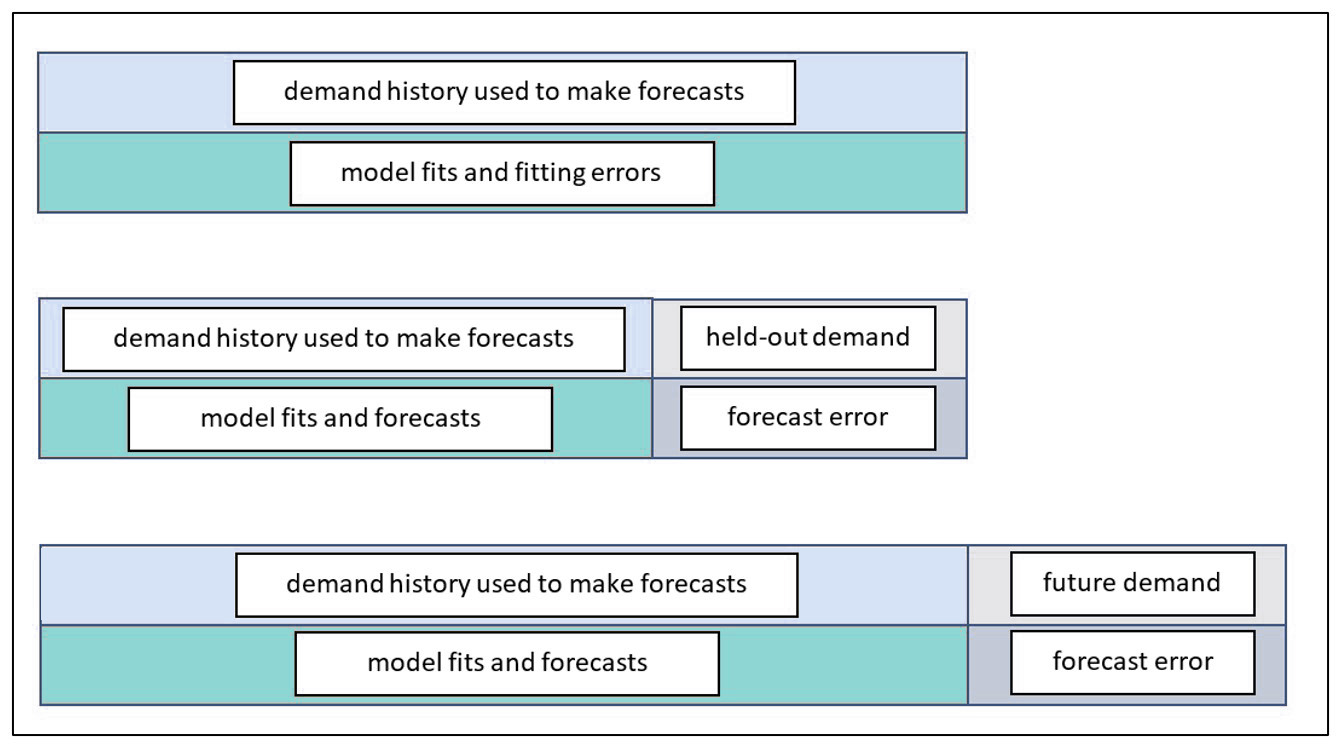

Most demand forecasting uses extrapolative models. Also called time-series models, these forecast demand using only the past values of an item’s demand. Plots of past values reveal trend and seasonality and volatility, so there is a lot they are good for. But there is another type of model – causal models —that can potentially improve forecast accuracy beyond what you can get from extrapolative models.

Causal models bring more input data to the forecasting task: information on presumed forecast “drivers” external to the demand history of an item. Examples of potentially useful causal factors include macroeconomic variables like the inflation rate, the rate of GDP growth, and raw material prices. Examples not tied to the national economy include industry-specific growth rates and your own and competitors’ ad spending. These variables are usually used as inputs to regression models, which are equations with demand as an output and causal variables as inputs.

Forecasting using Causal Models

Many firms have an S&OP process that involves a monthly review of statistical (extrapolative) forecasts in which management adjusts forecasts based on their judgement. Often this is an indirect and subjective way to work causal models into the process without doing the regression modeling.

To actually make a causal regression model, first you have to nominate a list of potentially-useful causal predictor variables. These may come from your subject matter expertise. For example, suppose you manufacture window glass. Much of your glass may end up in new homes and new office buildings. So, the number of new homes and offices being built are plausible predictor variables in a regression equation.

There is a complication here: if you are using the equation to predict something, you must first predict the predictors. For example, sales of glass next quarter may be strongly related to numbers of new homes and new office buildings next quarter. But how many new homes will there be next quarter? That’s its own forecasting problem. So, you have a potentially powerful forecasting model, but you have extra work to do to make it usable.

There is one way to simplify things: if the predictor variables are “lagged” versions of themselves. For example, the number of new building permits issued six months ago may be a good predictor of glass sales next month. You don’t have to predict the building permit data – you just have to look it up.

Is it a causal relationship or just a spurious correlation?

Causal models are the real deal: there is an actual mechanism that relates the predictor variable to the predicted variable. The example of predicting glass sales from building permits is an example.

A correlation relationship is more iffy. There is a statistical association that may or may not provide a solid basis for forecasting. For example, suppose you sell a product that happens to appeal most strongly to Dutch people but you don’t realize this. The Dutch are, on average, the tallest people in Europe. If your sales are increasing and the average height of Europeans is increasing, you might use that relationship to good effect. However, if the proportion of Dutch in the Euro zone is decreasing while the average height is increasing because the mix of men versus women is shifting toward men, what can go wrong? You will expect sales to increase because average height is increasing. But your sales are really mostly to the Dutch, and their relative share of the population is shrinking, so your sales are really going to decrease instead. In this case the association between sales and customer height is a spurious correlation.

How can you tell the difference between true and spurious relationships? The gold standard is to do a rigorous scientific experiment. But you are not likely to be in position to do that. Instead, you have to rely on your personal “mental model” of how your market works. If your hunches are right, then your potential causal models will correlate with demand and causal modeling will pay off for you, either to supplement extrapolative models or to replace them.