We recently met with the IT team at one of our customers to discuss data requirements and installation of our API based integration that would pull data from their on-premises installation of their ERP system. The IT manager and analyst both expressed significant concern about providing this data and seriously questioned why it needed to be provided at all. They even voiced concerns that their data might be resold to their competition. Their reaction was a big surprise to us. We wrote this blog with them in mind and to make it easier for others to communicate why certain data is necessary to support an effective demand planning process.

Please note that if you are a forecast analyst, demand planner, of supply chain professional then most of what you’ll read below will be obvious. But what this meeting taught me is that what is obvious to one group of specialists isn’t going to be obvious to another group of specialists in an entirely different field.

The Four main types of data that are needed are:

- Historical transactions, such as sales orders and shipments.

- Job usage transactions, such as what components are needed to produce finished goods

- Inventory Transfer transactions, such as what inventory was shipped from one location to another.

- Pricing, costs, and attributes, such as the unit cost paid to the supplier, the unit price paid by the customer, and various meta data like product family, class, etc.

Below is a brief explanation of why this data is needed to support a company’s implementation of demand planning software.

Transactional records of historical sales and shipments by customer

Think of what was drawn out of inventory as the “raw material” required by demand planning software. This can be what was sold to whom and when or what you shipped to whom and when. Or what raw materials or subassemblies were consumed in work orders and when. Or what is supplied to a satellite warehouse from a distribution center and when.

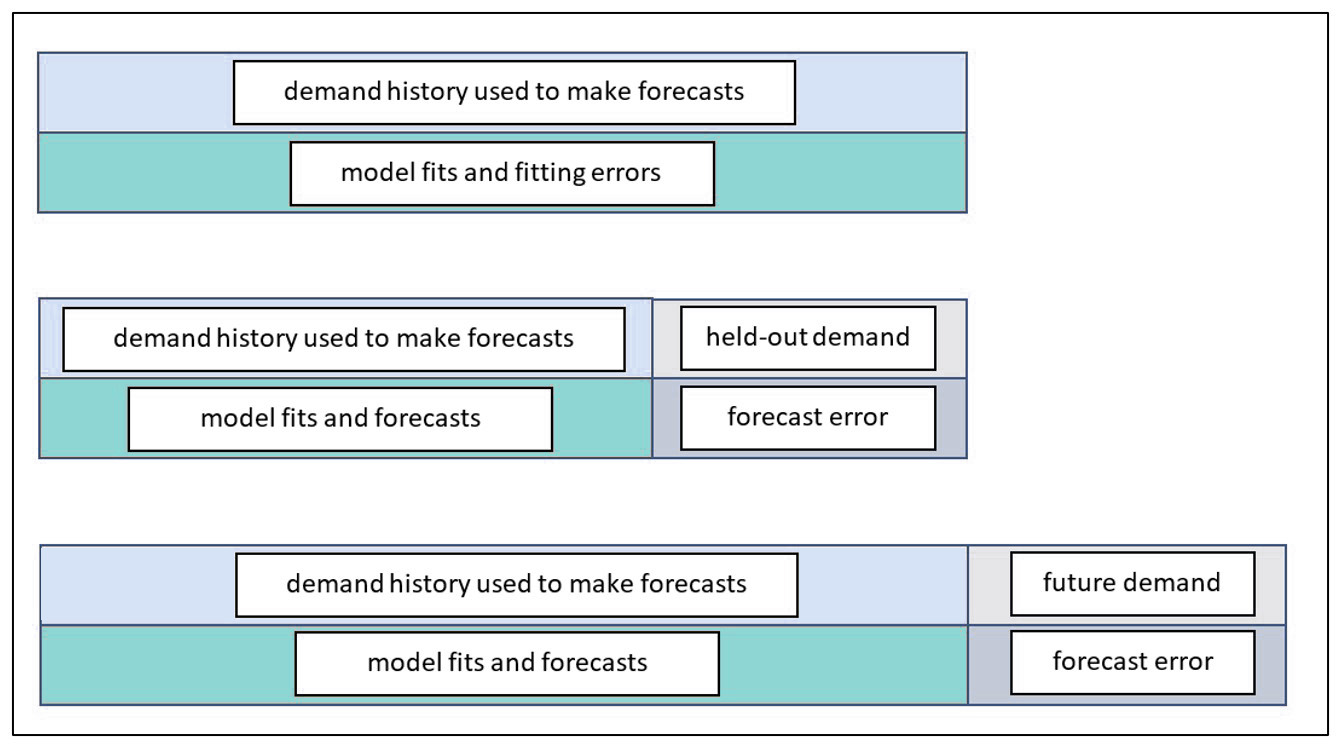

The history of these transactions is analyzed by the software and used to produce statistical forecasts that extrapolate observed patterns. The data is evaluated to uncover patterns such as trend, seasonality, cyclical patterns, and to identify potential outliers that require business attention. If this data is not generally accessible or updated in irregular intervals, then it is nearly impossible to create a good prediction of the future demand. Yes, you could use business knowledge or gut feel but that doesn’t scale and nearly always introduces bias into the forecast (i.e., consistently forecasting too high or too low).

Data is needed at the transactional level to support finer grained forecasting at the weekly or even daily levels. For example, as a business enters its busy season it may want to start forecasting weekly to better align production to demand. You can’t easily do that without having the transactional data in a well-structured data warehouse.

It might also be the case that certain types of transactions shouldn’t be included in demand data. This can happen when demand results from a steep discount or some other circumstance that the supply chain team knows will skew the results. If the data is provided in the aggregate, it is much harder to segregate these exceptions. At Smart Software, we call the process of figuring out which transactions (and associated transactional attributes) should be counted in the demand signal as “demand signal composition.” Having access to all the transactions enables a company to modify their demand signal as needed over time within the software. Only providing some of the data results in a far more rigid demand composition that can only be remedied with additional implementation work.

Pricing and Costs

The price you sold your products for and the cost you paid to procure them (or raw materials) is critical to being able to forecast in revenue or costs. An important part of the demand planning process is getting business knowledge from customers and sales teams. Sales teams tend to think of demand by customer or product category and speak in the language of dollars. So, it is important to express a forecast in dollars. The demand planning system cannot do that if the forecast is shown in units only.

Often, the demand forecast is used to drive or at least influence a larger planning & budgeting process and the key input to a budget is a forecast of revenue. When demand forecasts are used to support the S&OP process, the Demand Planning software should either average pricing across all transactions or apply “time-phased” conversions that consider the price sold at that time. Without the raw data on pricing and costs, the demand planning process can still function, but it will be severely impaired.

Product attributes, Customer Details, and Locations

Product attributes are needed so that forecasters can aggregate forecasts across different product families, groups, commodity codes, etc. It is helpful to know how many units and total projected dollarized demand for different categories. Often, business knowledge about what the demand might be in the future is not known at the product level but is known at the product family level, customer level, or regional level. With the addition of product attributes to your demand planning data feed, you can easily “roll up” forecasts from the item level to a family level. You can convert forecasts at these levels to dollars and better collaborate on how the forecast should be modified.

Once the knowledge is applied in the form of a forecast override, the software will automatically reconcile the change to all the individual items that comprise the group. This way, a forecast analyst doesn’t have to individually adjust every part. They can make a change at the aggregate level and let the demand planning software do the reconciliation for them.

Grouping for ease of analysis also applies to customer attributes, such as assigned salesperson or a customer’s preferred ship from location. And location attributes can be useful, such as assigned region. Sometimes attributes relate to a product and location combination, like preferred supplier or assigned planner, which can differ for the same product depending on warehouse.

A final note on confidentiality

Recall that our customer expressed concern that we might sell their data to a competitor. We would never do that. For decades, we have been using customer data for training purposes and for improving our products. We are scrupulous about safeguarding customer data and anonymizing anything that might be used, for instance, to illustrate a point in a blog post.