Here are examples of forecasting problems that SmartForecasts can solve, along with the kinds of business data representative of each.

Forecasting an item based on its pattern

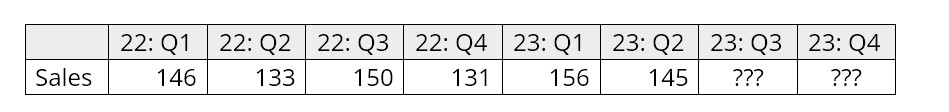

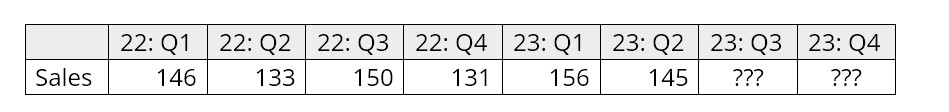

Given the following six quarterly sales figures, what sales can you expect for the third and fourth quarters of 2023?

Sales by Quarter

SmartForecasts gives you many ways to approach this problem. You can make your own statistical forecasts using any of six different exponential smoothing and moving average methods. Or, like most nontechnical forecasters, you can use the time-saving Automatic command, which automatically selects and applies the most accurate method for your data. Finally, to incorporate your business judgment into the forecasting process, you can graphically adjust any statistical forecast result using SmartForecasts’ “eyeball” adjustment capabilities.
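To make the idea of automatic method selection concrete, here is a minimal Python sketch. It is not SmartForecasts' actual algorithm: it assumes only two candidate methods (a three-period moving average and simple exponential smoothing with a fixed weight), ranks them by mean absolute one-step-ahead error over the history, and uses the winner. The function names, the `alpha` and `window` settings, and the sales figures are all hypothetical.

```python
from statistics import mean

def moving_average_forecast(history, window=3):
    """Forecast the next value as the mean of the last `window` observations."""
    return mean(history[-window:])

def exponential_smoothing_forecast(history, alpha=0.3):
    """Forecast the next value with simple (single) exponential smoothing."""
    level = history[0]
    for value in history[1:]:
        level = alpha * value + (1 - alpha) * level
    return level

def one_step_errors(history, method, min_train=3):
    """Absolute one-step-ahead errors, forecasting each point from the data before it."""
    return [abs(history[t] - method(history[:t]))
            for t in range(min_train, len(history))]

def select_method(history, methods):
    """Return the name of the method with the lowest mean absolute error, plus all scores."""
    scores = {name: mean(one_step_errors(history, fn)) for name, fn in methods.items()}
    best = min(scores, key=scores.get)
    return best, scores

# Hypothetical quarterly sales, made up for illustration only.
sales = [100, 104, 110, 113, 119, 124]
methods = {
    "moving_average": moving_average_forecast,
    "exp_smoothing": exponential_smoothing_forecast,
}

best, scores = select_method(sales, methods)
forecast = methods[best](sales)
print(f"Selected method: {best}, next-quarter forecast: {forecast:.1f}")
```

Both candidates here produce a flat forecast, so the same value would apply to the third and fourth quarters. Methods that model trend or seasonality would give different values for each quarter.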

Forecasting an item based on its relationship to other variables

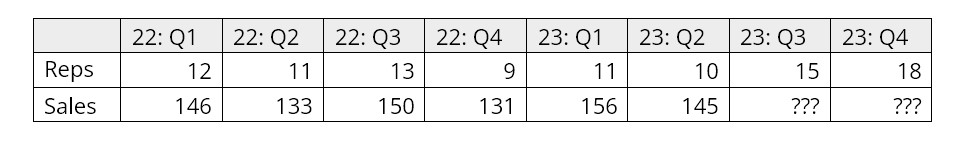

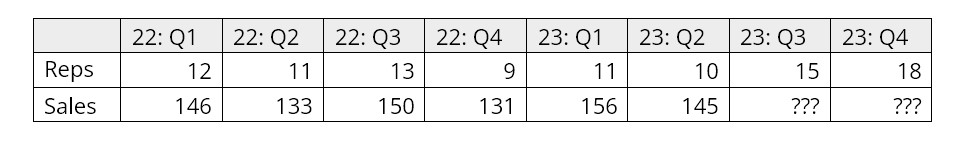

Given the following historical relationship between unit sales and the number of sales representatives, what sales levels can you expect when the planned increase in sales staff takes place over the final two quarters of 2023?

Sales and Sales Representatives by Quarter

You can answer a question like this using SmartForecasts’ powerful Regression command, designed specifically for forecasting applications that call for regression analysis. Regression models with an essentially unlimited number of independent/predictor variables are possible, although most useful regression models use only a handful of predictors.
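As a rough illustration of the underlying idea, the following Python sketch fits a straight line relating unit sales to the number of sales representatives by ordinary least squares, then applies it to planned staffing levels. It assumes a single predictor and a linear relationship. The `fit_line` helper and all of the numbers are hypothetical and are not taken from SmartForecasts.

```python
def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

# Hypothetical history, made up for illustration only.
reps = [10, 12, 14, 16, 18, 20]              # sales representatives per quarter
unit_sales = [205, 238, 284, 317, 362, 398]  # unit sales in the same quarters
planned_reps = [22, 24]                      # planned staffing for the next two quarters

intercept, slope = fit_line(reps, unit_sales)
forecasts = [intercept + slope * r for r in planned_reps]

for r, f in zip(planned_reps, forecasts):
    print(f"{r} reps -> forecast of about {f:.0f} units")
```

Note that the planned staffing levels lie outside the range of the history, so the forecast relies on the linear relationship continuing to hold.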

Simultaneously forecasting a number of product items and their total

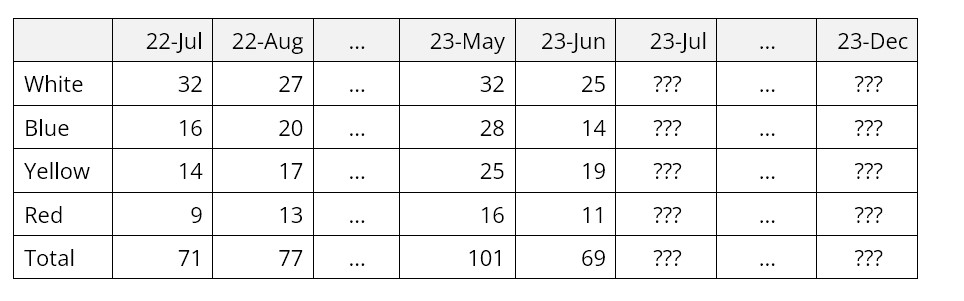

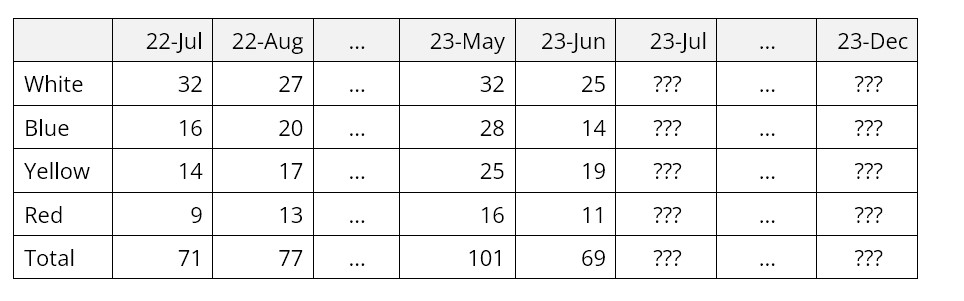

Given the following total sales for all dress shirts and the distribution of sales by color, what will individual and total sales be over the next six months?

Monthly Dress Shirt Sales by Color

SmartForecasts’ unique Group Forecasting feature automatically and simultaneously forecasts closely related time series, such as these items in the same product group. This saves considerable time and provides forecast results not only for the individual items but also for their total. “Eyeball” adjustments at both the item and group levels are easy to make. You can quickly create forecasts for product groups with hundreds or even thousands of items.

Forecasting thousands of items automatically

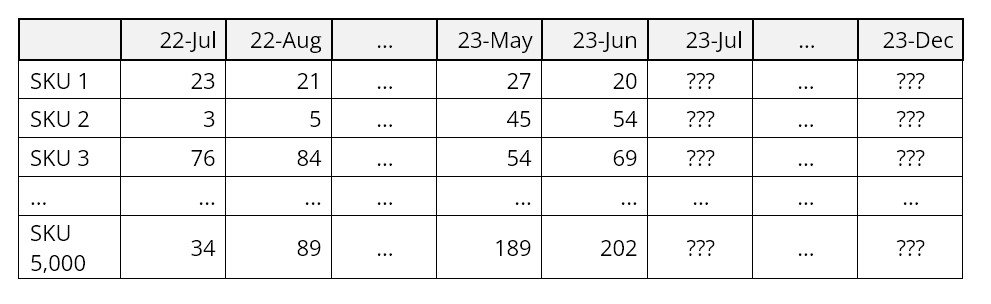

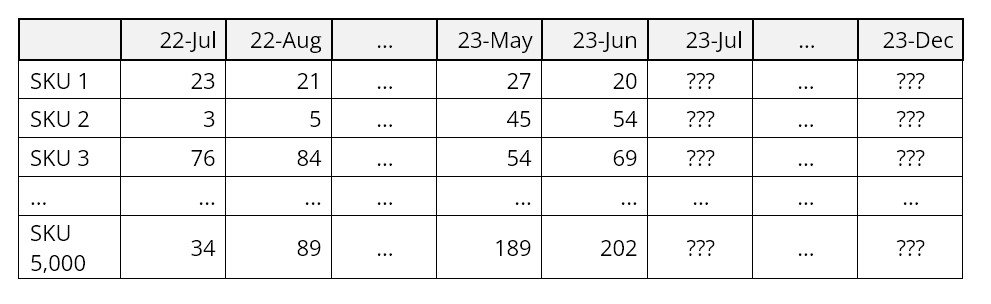

Given the following record of product demand at the SKU level, what can you expect demand to be over the next six months for each of the 5,000 SKUs?

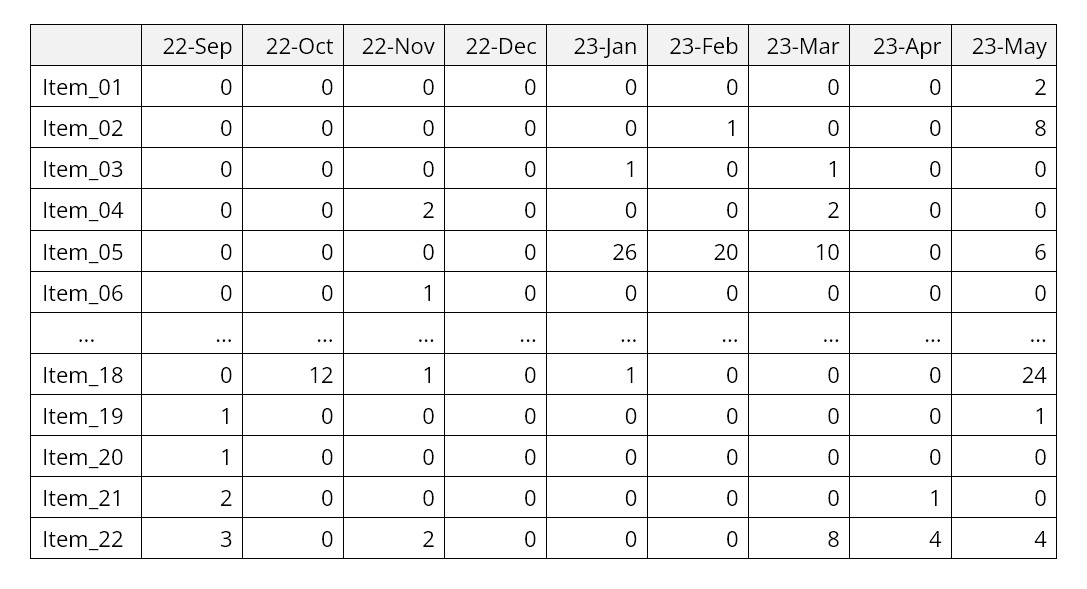

Monthly Product Demand by SKU (Stock Keeping Unit)

In just a few minutes, SmartForecasts’ powerful Automatic Selection can take a forecasting job of this size, read the product demand data, automatically create statistical forecasts for each SKU, and save the results. The results are then ready for export to your ERP system through any of our API-based connectors or via file export. Once set up, forecasts are produced automatically each planning cycle without user intervention.

Forecasting demand that is most often zero

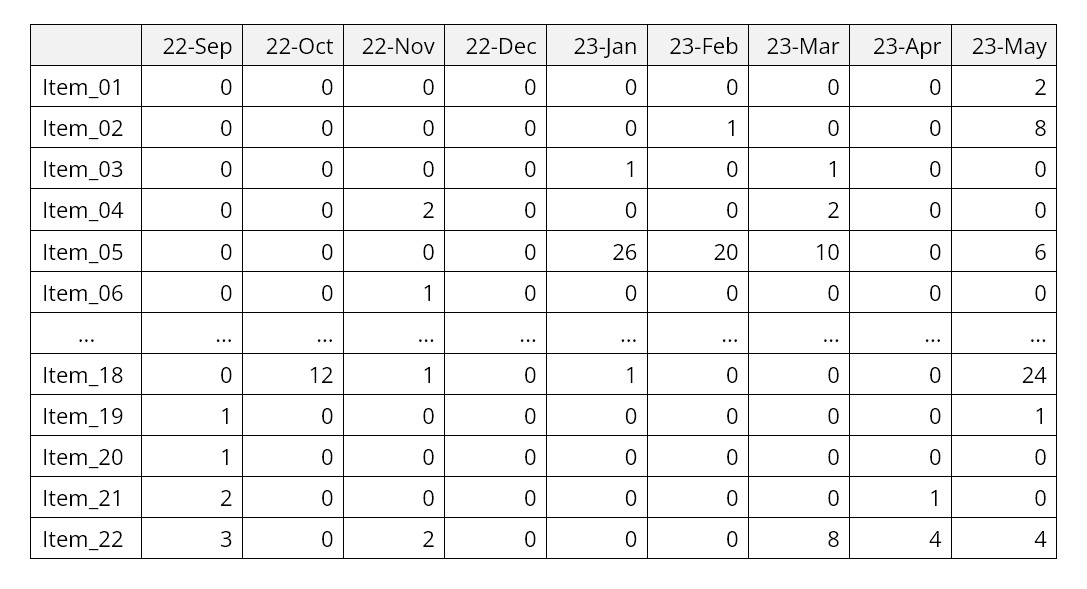

A distinct and especially challenging type of data to forecast is intermittent demand, which is most often zero but jumps to random nonzero values at random times. This pattern is typical of demand for slow-moving items, such as service parts or big-ticket capital goods.

For example, consider the following sample of demand for aircraft service parts. Note the preponderance of zero values with nonzero values mixed in, often in bursts.

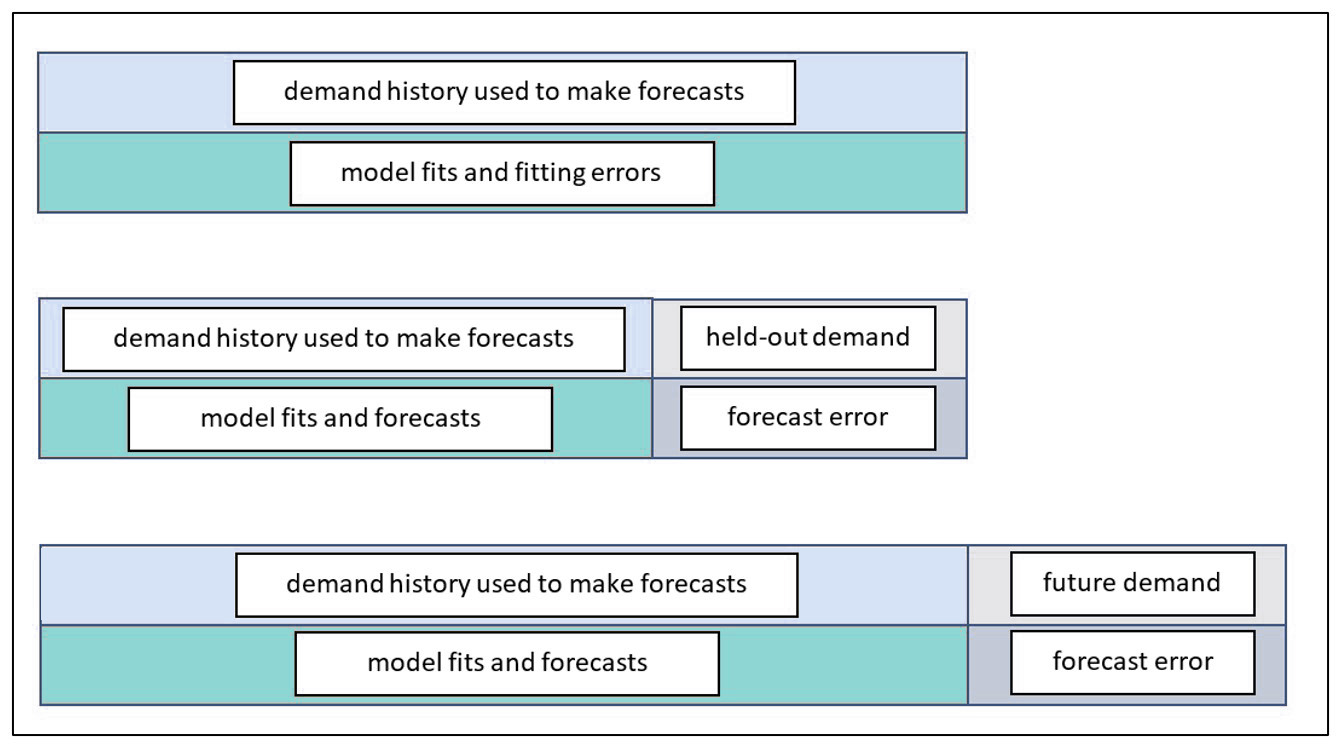

SmartForecasts has a unique method designed especially for this type of data: the Intermittent Demand forecasting feature. Since intermittent demand arises most often in the context of inventory control, this feature focuses on forecasting the range of likely values for the total demand over a lead time, e.g., cumulative demand over the period Jun-23 to Aug-23 in the example above.
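To show what "the range of likely values for the total demand over a lead time" can look like in practice, here is a small Python sketch. It is not the SmartForecasts Intermittent Demand method. It swaps in a plain bootstrap: draw past periods at random with replacement, sum them over the lead time, repeat many times, and read a percentile from the simulated totals. The function name, its parameters, and the demand history are hypothetical.

```python
import random

def lead_time_demand_percentile(history, lead_time, percentile, n_sims=10_000, seed=0):
    """Estimate a percentile of total demand over `lead_time` periods by resampling history."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    totals = sorted(
        sum(rng.choices(history, k=lead_time)) for _ in range(n_sims)
    )
    index = min(n_sims - 1, int(percentile * n_sims))
    return totals[index]

# Hypothetical monthly demand for one service part, made up for illustration only.
demand = [0, 0, 3, 0, 0, 0, 7, 2, 0, 0, 0, 0, 5, 0, 0, 1, 0, 0, 0, 4, 0, 0, 0, 0]

median = lead_time_demand_percentile(demand, lead_time=3, percentile=0.50)
p95 = lead_time_demand_percentile(demand, lead_time=3, percentile=0.95)
print(f"3-month demand: median about {median}, 95th percentile about {p95}")
```

This simple version treats each period as independent, so it ignores the bursts noted above and can only reproduce nonzero values that already appear in the history.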

Forecasting inventory requirements

Forecasting inventory requirements is a specialized variant of forecasting that focuses on the high end of the range of possible future values.

For simplicity, consider the problem of forecasting inventory requirements for just one period ahead, say one day ahead. Usually, the forecasting job is to estimate the most likely or average level of product demand. However, if available inventory equals the average demand, there is about a 50% chance that demand will exceed inventory, resulting in lost sales and/or lost goodwill. Setting the inventory level at, say, ten times the average demand will probably eliminate the problem of stockouts, but will just as surely result in bloated inventory costs.

The trick of inventory optimization is to strike a satisfactory balance: hold enough inventory to meet most demand without tying up too many resources in the process. Usually, the solution is a blend of business judgment and statistics. The judgmental part is to define an acceptable inventory service level, such as meeting 95% of demand immediately from stock. The statistical part is to estimate the 95th percentile of demand.

When not dealing with intermittent demand, SmartForecasts estimates the required inventory level by assuming a bell-shaped (Normal) curve of demand, estimating both the middle and the width of the bell curve, then using a standard statistical formula to estimate the desired percentile. The difference between the desired inventory level and the average level of demand is called the safety stock because it protects against the possibility of stockouts.
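The Normal-curve calculation can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than SmartForecasts' implementation: it takes the sample mean and sample standard deviation of past demand as the middle and width of the bell curve, and uses the standard library's `NormalDist` to find the multiplier for the chosen service level. The function name and the demand figures are hypothetical.

```python
from statistics import NormalDist, mean, stdev

def normal_inventory_level(demand_history, service_level):
    """Return (required inventory, safety stock) for one period ahead under a Normal model."""
    center = mean(demand_history)            # middle of the bell curve
    width = stdev(demand_history)            # width of the bell curve (sample std. dev.)
    z = NormalDist().inv_cdf(service_level)  # about 1.645 for a 95% service level
    required = center + z * width            # desired percentile of demand
    safety_stock = required - center         # cushion above average demand
    return required, safety_stock

# Hypothetical daily demand, made up for illustration only.
daily_demand = [48, 52, 50, 47, 55, 51, 49, 53, 46, 54]

required, safety_stock = normal_inventory_level(daily_demand, service_level=0.95)
print(f"Stock about {required:.1f} units, of which {safety_stock:.1f} is safety stock")
```

With a service level of 0.50 the multiplier is zero, so the required inventory equals average demand and there is no safety stock, which matches the 50% stockout risk described earlier.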

When dealing with intermittent demand, the bell-shaped curve is a poor approximation to the statistical distribution of demand. In this special case, SmartForecasts uses patented intermittent demand forecasting technology to estimate the inventory level required to meet the desired service level.