In my previous post in this series on essential concepts, “What is ‘A Good Forecast’”, I discussed the basic effort to discover the most likely future in a demand planning scenario. I defined a good forecast as one that is unbiased and as accurate as possible. But I also cautioned that, depending on the stability or volatility of the data we have to work with, there may still be some inaccuracy in even a good forecast. The key is to have an understanding of how much.

This topic, managing uncertainty, is the subject of a post by my colleague Tom Willemain, “The Average is not the Answer”. His post lays out the theory for responsibly confronting the limits of our predictive ability. It’s important to understand how this actually works.

As I briefly touched on at the end of my previous post, our approach begins with something called a “sliding simulation”. We estimate how accurately we are predicting the future by using our forecasting techniques on an older portion of history, excluding the most recent data. We can then compare what we would have predicted for the recent past with our actual real world information about what happened. This is a reliable method to estimate how closely we are predicting future demand.

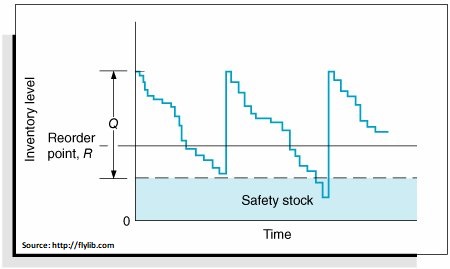
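To make the sliding simulation concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the method used inside any particular product: the three-period moving average stands in for whatever forecasting technique is actually in use, the demand numbers are invented, and the names `moving_average_forecast` and `sliding_simulation_errors` are hypothetical.

```python
from statistics import mean

def moving_average_forecast(history, window=3):
    """Stand-in forecaster (an assumption): mean of the last `window` values."""
    return mean(history[-window:])

def sliding_simulation_errors(demand, holdout=4, forecaster=moving_average_forecast):
    """Forecast each of the last `holdout` periods using only earlier data.

    Returns one error (actual minus forecast) per held-out period.
    """
    if holdout >= len(demand):
        raise ValueError("holdout must be shorter than the demand history")
    errors = []
    for origin in range(len(demand) - holdout, len(demand)):
        # The forecaster sees only the periods before `origin`.
        forecast = forecaster(demand[:origin])
        errors.append(demand[origin] - forecast)
    return errors

# Invented monthly demand history, for illustration only.
demand = [100, 104, 98, 102, 107, 99, 103, 101, 105, 97]
errors = sliding_simulation_errors(demand, holdout=4)
print(errors)
```

Each error compares what we would have predicted with what actually happened, which is exactly the comparison described above.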

Safety stock, a carefully measured inventory buffer that we hold above our prediction of most likely demand, is derived from the estimate of forecast error coming out of the “sliding simulation”. This approach strikes an efficient balance between ignoring the threat of the unpredictable and overcompensating at great cost.

In more technical detail: the forecast errors estimated by this sliding simulation process indicate the level of uncertainty. We use these errors to estimate the standard deviation of the forecasts. With regular demand, we can assume the forecasts (which are estimates of future behavior) are best represented by a bell-shaped probability distribution, what statisticians call the “normal distribution”. The center of that distribution is our point forecast. The width of that distribution is the standard deviation of the “sliding simulation” forecasts from the known actual values; we obtain this directly from our forecast error estimates.
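As a rough sketch of that step, the snippet below turns a point forecast and a set of forecast errors into a normal distribution using Python's standard library. It rests on assumptions: the errors are invented, the width is taken as the root mean square of the errors (which treats the forecast as unbiased; a sample standard deviation is another reasonable choice), and `forecast_distribution` is a hypothetical helper name.

```python
from math import sqrt
from statistics import NormalDist

def forecast_distribution(point_forecast, errors):
    """Normal distribution centered on the point forecast.

    The width is the root mean square of the forecast errors, i.e. the
    typical deviation of forecasts from actuals (assumes no bias).
    """
    sigma = sqrt(sum(e * e for e in errors) / len(errors))
    return NormalDist(mu=point_forecast, sigma=sigma)

# Invented forecast errors (actual minus forecast), for illustration only.
errors = [3.0, -4.0, 4.0, -3.0]
dist = forecast_distribution(100.0, errors)

# Estimated chance that demand lands more than one standard deviation
# above the point forecast.
p_exceed = 1 - dist.cdf(dist.mean + dist.stdev)
print(dist.mean, dist.stdev, p_exceed)
```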


Once we know the specific bell-shaped curve associated with the forecast, we can easily estimate the safety stock buffer that is needed. The only input required from us is the desired “service level”; from it, the safety stock can be calculated. (The service level is essentially a measure of how confident we need to be in our inventory stocking levels, with increasing confidence requiring corresponding expenditures on extra inventory.) Notice that we are assuming the correct distribution to use is the normal distribution. This is correct for most demand series where you have regular demand per period. It fails when demand is sporadic or intermittent.
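Under the normality assumption, the calculation reduces to multiplying the forecast error standard deviation by the normal quantile for the chosen service level. The sketch below shows one way to write that with Python's standard library. The function name `safety_stock` and the sample numbers are hypothetical, and the sketch treats the service level as the probability that demand in a period does not exceed the stocking level, which is only one of several definitions in use.

```python
from statistics import NormalDist

def safety_stock(service_level, error_std):
    """Buffer above the point forecast needed to reach `service_level`.

    Assumes normally distributed forecast errors with standard
    deviation `error_std`; not suitable for intermittent demand.
    """
    if not 0.0 < service_level < 1.0:
        raise ValueError("service_level must be strictly between 0 and 1")
    z = NormalDist().inv_cdf(service_level)  # standard normal quantile
    return z * error_std

# Invented inputs, for illustration only.
point_forecast = 100.0
error_std = 10.0
for level in (0.90, 0.95, 0.99):
    buffer = safety_stock(level, error_std)
    print(level, round(buffer, 1), round(point_forecast + buffer, 1))
```

The loop makes the cost of confidence visible: each step up in service level requires a disproportionately larger buffer, because the tails of the bell curve thin out slowly.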

In the next piece in this series, I’ll discuss how Smart Forecasts deals with estimating safety stock in those cases of intermittent demand, when the assumption of normality is incorrect.

Nelson Hartunian, PhD, co-founded Smart Software, formerly served as President, and currently oversees it as Chairman of the Board. He has, at various times, headed software development, sales and customer service.
