We work with many customers in many industries to connect our advanced analytical, forecasting, and inventory planning software to their ERP systems. Despite the variety of situations we encounter, some data-related problems tend to crop up over and over. This blog lists ten tips that can help you avoid these common problems.

Once a customer is ready to implement software for demand planning and/or inventory optimization, they need to connect the analytics software to their corporate data stream. In our case, we mainline transaction data directly into the analytical software. This provides information on item demand and supplier lead times, among other things. We extract the rest of the data from the ERP system itself, which provides metadata such as each item’s location, unit cost, and product group.

These tips are important because it is not uncommon for implementation projects to start with great enthusiasm but then quickly bog down because of problems with the data that fuel the analytics. These delays can reduce team enthusiasm, embarrass project leaders, and delay (and thereby reduce) the ROI payoff that ultimately justified the implementation project in the first place.

The importance of connecting the analytics software to the corporate data stream

Here is the list of tips, grouped by the general themes of handling files safely, ensuring data integrity, and dealing with exceptions.

Handling Files Safely

- Have a test environment to use as a “sandbox.” Copy your current data to a test environment where you can safely experiment with the software without risking current operations. Besides helping users learn the ins and outs of the new software, having the latest data in the sandbox allows end users to discover any problems with the data.

- Protect your data extraction rules. If you aren’t using a pre-built connector to your ERP system, then you need to ensure that you can create savable extract rules to move data from your ERP to a file. Column orders, data types, date formats, etc. should not vary each time the same extract is re-executed. Otherwise the project gets bogged down in manual errors or confusion when data are re-extracted after fixes or when new data roll in. All data extraction rules should be saved and available to IT. We’ve encountered situations where files were extracted in an ad hoc manner, resulting in a slightly different format with each new extract. We’ve also seen customers work hard to develop a complex and accurate data extraction routine only to find all their work was lost because it was not properly archived. Both situations led to confusion and project delays.

- Don’t use Excel native file formats for data transfers. If your planning solution doesn’t have a direct integration to your ERP system, then export ERP data to a flat file format, such as comma-delimited (.csv) or tab-delimited text files. Don’t use MS Excel formats such as .xls or .xlsx as the export file type because Excel auto-reformats field values in unexpected ways, for example by stripping leading zeros from item numbers or converting values that look like dates. Many users assume they need to use .xlsx files if they want to manually review them, not realizing that .csv or .txt files can be opened just as easily and, as long as they are not re-saved from Excel, don’t carry the risk of auto-reformats.
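One way to guard against extracts that drift in layout is to keep the extraction rule in a saved, machine-readable form and check every new flat file against it. The following is a minimal sketch in Python, not a prescribed method: the `EXTRACT_SPEC` dictionary, the column names, and the `validate_extract` function are all hypothetical and would need to be adapted to your own ERP extract.

```python
import csv
import io
from datetime import datetime

# Hypothetical saved extraction rule: column order, field kinds, date format.
EXTRACT_SPEC = {
    "columns": ["item_id", "order_date", "quantity"],
    "kinds": {"item_id": "text", "order_date": "date", "quantity": "number"},
    "date_format": "%Y-%m-%d",
}


def validate_extract(csv_text, spec=EXTRACT_SPEC):
    """Return a list of problems; an empty list means the extract matches the spec."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader, None)
    if header != spec["columns"]:
        return [f"header {header} differs from saved column order {spec['columns']}"]

    problems = []
    for row_no, row in enumerate(reader, start=1):
        if len(row) != len(header):
            problems.append(f"row {row_no}: expected {len(header)} fields, got {len(row)}")
            continue
        for name, value in zip(header, row):
            kind = spec["kinds"][name]
            try:
                if kind == "date":
                    datetime.strptime(value, spec["date_format"])
                elif kind == "number":
                    float(value)
            except ValueError:
                problems.append(f"row {row_no}: {name}={value!r} is not a valid {kind}")
    return problems


# Every field is read as text, so an item number such as "00123" keeps its leading zeros.
good = "item_id,order_date,quantity\n00123,2024-01-31,5\n"
print(validate_extract(good))
```

Because the spec lives in one place, it can be archived alongside the extract query itself, so a re-extract months later can be checked against the same rule.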

Ensuring Data Integrity

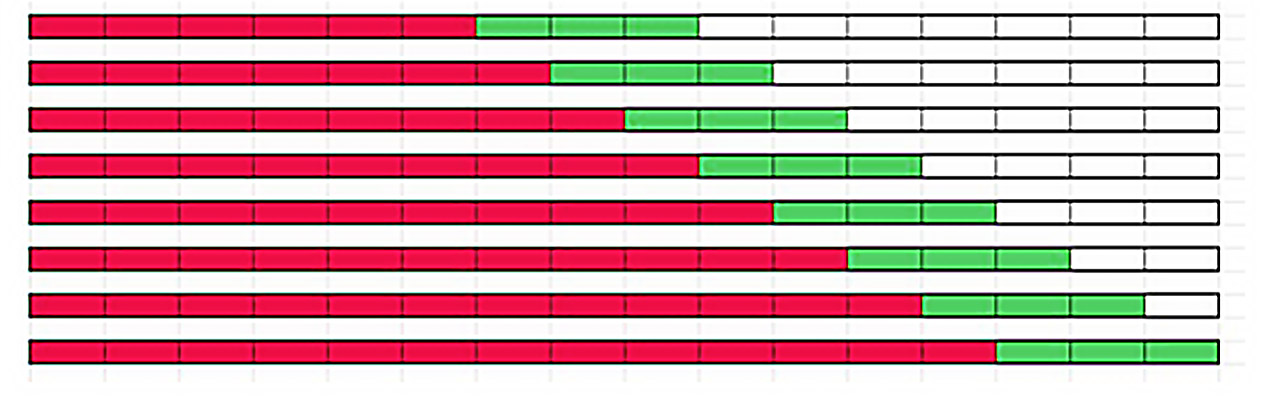

Data problems and solutions in software implementation.

- Confirm the accuracy of your catalog data. Export your catalog data (i.e., list of products, list of customers, list of suppliers) and all their relevant attributes. Check for wrong or suspicious values in the attributes (especially item lead times and costs). Problematic values include blanks, zeros when you don’t expect zero as a data value, and text strings when you expect numeric values (or vice versa). It can help to open each extract file in Excel and filter on each attribute field, looking at the unique values to see what jumps out as not like the others (e.g., “1”, “2”, “&&”, “3”…).

- Confirm the accuracy of your grouping data. Another useful activity that can be done while viewing the product catalog data in Excel is to check major grouping/filtering fields like product family, category or class to make sure no products are assigned to the wrong category, class, or family. Likewise check any product status/product lifecycle fields, e.g., make sure that you have correctly identified all discontinued products.

- Check for spurious control characters within text fields. Check that there are no unusual characters extracted in your product descriptions, such as carriage returns or tabs within the description value itself. If so, make sure you can extract that data using double quote enclosures around the description or else fix data entry errors in the ERP system directly.

- Verify that data have a standard layout. Check that your extracts of transactional data (e.g., customer orders, customer shipments, purchase orders, supplier receipts) contain no duplicate rows. If they do, either identify what fields need to be added to make the rows distinct or, if they are truly duplicates, remove the extra copies in the ERP database.
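The manual checks above can also be scripted so they run on every new extract. The sketch below is one possible approach using only the Python standard library: it flags blanks, zeros, and non-numeric text in fields expected to be numeric, control characters inside text fields, and exact duplicate rows. The function names, field names, and sample data are illustrative assumptions, and whether a zero is really an error depends on the attribute.

```python
import csv
import io
from collections import Counter


def audit_catalog(rows, numeric_fields, text_fields):
    """Return (row_number, field, issue) tuples for suspicious attribute values."""
    issues = []
    for n, row in enumerate(rows, start=1):
        for field in numeric_fields:
            value = (row.get(field) or "").strip()
            if value == "":
                issues.append((n, field, "blank"))
                continue
            try:
                number = float(value)
            except ValueError:
                issues.append((n, field, "not numeric"))
                continue
            if number == 0:
                issues.append((n, field, "zero"))
        for field in text_fields:
            value = row.get(field) or ""
            # Tabs, carriage returns, and line feeds all have code points below 32.
            if any(ord(ch) < 32 for ch in value):
                issues.append((n, field, "control character"))
    return issues


def find_duplicate_rows(rows):
    """Return one copy of every row that appears more than once."""
    counts = Counter(tuple(sorted(row.items())) for row in rows)
    return [dict(key) for key, count in counts.items() if count > 1]


# Hypothetical catalog extract; the description on the second row contains a tab.
catalog_text = (
    "item_id,description,lead_time_days,unit_cost\n"
    "A1,Widget,14,2.50\n"
    'A2,"Gadget\twith tab",&&,0\n'
    "A3,Gizmo,,1.25\n"
)
catalog = list(csv.DictReader(io.StringIO(catalog_text)))
for issue in audit_catalog(catalog, ["lead_time_days", "unit_cost"], ["description"]):
    print(issue)
```

Note that the tab in the sample description survives parsing only because the field is enclosed in double quotes, which is the same safeguard recommended for the extract itself.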

Dealing with Exceptions

- Detect and react to exceptions. Identify any attributes of transactional data that would mean a transaction should not be used, such as a cancelled-order status. Understand the process around mistakenly entered orders or cancelled orders so that these types of transactions are neither counted nor double counted. Watch for other attributes that imply a transaction should be excluded, such as drop shipping to the customer directly from a supplier rather than shipping from your own stock.

- Codify the handling of exceptional internal transfers. Define the idealized record of emergency internal stock transfers and then provide rules to edit any transactions done on an emergency basis that vary from the ideal pattern. For example, if product P1 is supposed to be shipped out of location A, but there was an emergency shipment out of location B, the demand history for P1 at location A is understated and the history at location B is overstated. If possible, provide a rule on the preferred shipping location for each product so that the history can be corrected by the inventory optimization software for forecasting purposes.

- Devise a procedure to handle supersession. Supersessions arise, for instance, when adopting a new ERP that re-indexes the products, when an old product is replaced by an updated version, or when an entirely new product obsoletes an old one. If product identifiers changed within the past few years for any reason, identify a mapping from the old product ID to the new. These rules should be available to the demand planning and forecasting system and editable within the application.
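The three exception rules above can be expressed as a small cleaning step applied before demand history is built. This is only a sketch under stated assumptions: the status code `CANCELLED`, the `drop_ship` and `emergency` flags, and the `PREFERRED_LOCATION` and `SUPERSESSION` tables are hypothetical stand-ins for whatever your ERP actually records, and it does not describe how any particular planning product handles these cases.

```python
from collections import defaultdict

# Hypothetical rule tables; real values would come from the ERP or the planners.
EXCLUDED_STATUSES = {"CANCELLED"}
PREFERRED_LOCATION = {"P1": "A"}   # product -> location it should ship from
SUPERSESSION = {"P0": "P1"}        # old product ID -> new product ID


def resolve_item(item_id, mapping=SUPERSESSION):
    """Follow the old -> new ID chain to the current ID, stopping if a cycle is found."""
    seen = set()
    while item_id in mapping and item_id not in seen:
        seen.add(item_id)
        item_id = mapping[item_id]
    return item_id


def clean_demand(transactions):
    """Total demand by (item, location) after applying the exception rules."""
    demand = defaultdict(float)
    for t in transactions:
        # Rule 1: skip transactions that should not count as demand on own stock.
        if t["status"] in EXCLUDED_STATUSES or t.get("drop_ship"):
            continue
        # Rule 3: credit history of a superseded product to its replacement.
        item = resolve_item(t["item"])
        # Rule 2: move emergency shipments back to the preferred location.
        location = t["location"]
        if t.get("emergency"):
            location = PREFERRED_LOCATION.get(item, location)
        demand[(item, location)] += t["qty"]
    return dict(demand)


transactions = [
    {"item": "P1", "location": "A", "qty": 10, "status": "SHIPPED"},
    {"item": "P1", "location": "B", "qty": 4, "status": "SHIPPED", "emergency": True},
    {"item": "P0", "location": "A", "qty": 3, "status": "SHIPPED"},
    {"item": "P1", "location": "A", "qty": 7, "status": "CANCELLED"},
    {"item": "P1", "location": "A", "qty": 2, "status": "SHIPPED", "drop_ship": True},
]
print(clean_demand(transactions))  # {('P1', 'A'): 17.0}
```

Keeping the rules in plain lookup tables, rather than buried in code, reflects the point made above that they should remain editable by planners.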

Failure to anticipate data problems is a major impediment to smooth implementation of new analytical software. No list can enumerate all the odd things that can go wrong in curating data, but this one highlights common problems and sensible responses.

Note: For more on how data problems can stymie the application of advanced analytical software, see Sean Snapp’s excellent blog on how this issue is obstructing the application of artificial intelligence and machine learning. https://www.brightworkresearch.com/demandplanning/2019/05/how-many-ai-projects-will-fail-due-to-a-lack-of-data/