How long should it take for a demand forecast to be computed using statistical methods? This question is often asked by customers and prospects. The answer depends on several factors. Forecast results for a single item can be computed in the blink of an eye, in as little as a few hundredths of a second, but sometimes they may require as much as five seconds. To understand the difference, it’s important to recognize that there is more involved than grinding through the forecast arithmetic itself. Here are six factors that influence the speed of your forecast engine.

1) Forecasting method. Traditional time-series extrapolative techniques (such as exponential smoothing and moving average methods), when cleverly coded, are lightning fast. For example, the Smart Forecast automatic forecasting engine that leverages these techniques and powers our demand planning and inventory optimization software can crank out statistical forecasts on 1,000 items in 1 second! Extrapolative methods produce an expected forecast and a summary measure of forecast uncertainty. However, more complex models in our platform that generate probabilistic demand scenarios take much longer given the same computing resources. This is partly because they create a much larger volume of output, usually thousands of plausible future demand sequences. More time, yes, but not time wasted, since these results are much more complete and form the basis for downstream optimization of inventory control parameters.
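To see why extrapolative methods are so cheap, here is a minimal sketch of simple exponential smoothing in Python. It is an illustration only, not the Smart Forecast engine: the function name, the `alpha` smoothing weight, and the sample demand values are all assumptions made for this example. The method makes a single pass over the history and returns an expected forecast plus one summary measure of uncertainty (the root mean squared one-step-ahead error).

```python
def simple_exponential_smoothing(history, alpha=0.3):
    """Return (forecast, rmse) for one item's demand history.

    history: demand per period, oldest first (hypothetical input format).
    alpha:   smoothing weight between 0 and 1 (illustrative default).
    """
    if not history:
        raise ValueError("history must not be empty")
    level = history[0]          # start the level at the first observation
    errors = []
    for actual in history[1:]:
        errors.append(actual - level)                  # one-step-ahead error
        level = alpha * actual + (1 - alpha) * level   # update the level
    rmse = (sum(e * e for e in errors) / len(errors)) ** 0.5 if errors else 0.0
    return level, rmse


# Made-up demand history, for illustration only.
demand = [12, 15, 11, 14, 13, 16, 12, 15]
forecast, rmse = simple_exponential_smoothing(demand)
print(f"forecast={forecast:.2f}, rmse={rmse:.2f}")
```

The work grows linearly with the length of the history, which is why this family of methods can cover many items per second. A scenario-based model, by contrast, has to produce thousands of future demand sequences per item.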

2) Computing resources. The more resources you throw at the computation, the faster it will be. However, resources cost money, and the investment may not be economical. For example, to make certain types of machine learning-based forecasts work, the system will need to run computations in parallel across multiple threads and servers to deliver results quickly. So, make sure you understand the assumed compute resources and associated costs. Our computations happen on the Amazon Web Services cloud, so it is possible to pay for a great deal of parallel computation if desired.

3) Number of time series. Do you have to forecast only a few hundred items in a single location or many thousands of items across dozens of locations? The greater the number of SKU x Location combinations, the greater the time required. However, it is possible to trim the time to get demand forecasts through better demand classification. For example, it is not always necessary to forecast every single SKU x Location combination. Modern demand planning software can first subset the data based on volume/frequency classifications before running the forecast engine. We’ve observed situations where over one million SKU x Location combinations existed, but only ten percent had demand in the preceding twelve months.
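The subsetting step can be sketched as a simple filter that runs before the forecast engine. The data layout here (a dictionary keyed by SKU and location, holding monthly demand) and the function name are assumptions made for illustration. Real classification schemes typically also consider volume and demand frequency.

```python
def active_series(history_by_key, lookback=12):
    """Keep only SKU x Location series with demand in the last `lookback` periods.

    history_by_key: dict mapping (sku, location) -> list of demand per period,
                    oldest first (hypothetical layout).
    """
    if lookback <= 0:
        raise ValueError("lookback must be positive")
    return {
        key: history
        for key, history in history_by_key.items()
        if any(qty > 0 for qty in history[-lookback:])
    }


# Made-up example: one active series, one dormant for more than a year.
histories = {
    ("SKU-1", "Boston"): [0, 4, 0, 2, 0, 0, 1, 0, 0, 3, 0, 0],
    ("SKU-2", "Boston"): [5, 2] + [0] * 12,
}
to_forecast = active_series(histories)
print(f"{len(to_forecast)} of {len(histories)} series need a forecast")
```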

4) Historical Bucketing. Are you forecasting using daily, weekly, or monthly time buckets? The more granular the bucketing, the more time it is going to take to compute statistical forecasts. Many companies will wonder, “Why would anyone want to forecast on a daily basis?” However, state-of-the-art demand forecasting software can leverage daily data to detect simultaneous day-of-week and week-of-month patterns that would otherwise be obscured with traditional monthly demand buckets. And the speed of business continues to accelerate, threatening the competitive viability of the traditional monthly planning tempo.
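To make the effect of bucketing concrete, the sketch below aggregates one year of daily demand into weekly and monthly buckets and counts the resulting data points. The function name, the Monday-based week convention, and the flat one-unit-per-day demand are illustrative assumptions.

```python
from collections import defaultdict
from datetime import date, timedelta


def bucket_daily_demand(daily, granularity):
    """Aggregate daily demand (dict of date -> quantity) into larger buckets.

    granularity: "day", "week" (weeks start on Monday), or "month".
    Returns a dict keyed by the first day of each bucket.
    """
    buckets = defaultdict(float)
    for day, qty in daily.items():
        if granularity == "day":
            key = day
        elif granularity == "week":
            key = day - timedelta(days=day.weekday())  # Monday of that week
        elif granularity == "month":
            key = day.replace(day=1)
        else:
            raise ValueError(f"unknown granularity: {granularity}")
        buckets[key] += qty
    return dict(sorted(buckets.items()))


# One year of made-up daily demand, one unit per day.
start = date(2023, 1, 1)
daily = {start + timedelta(days=i): 1.0 for i in range(365)}
for granularity in ("day", "week", "month"):
    print(granularity, len(bucket_daily_demand(daily, granularity)))
```

The same year of history yields 365 daily points but only 12 monthly ones, so a daily model has roughly thirty times as much data to process per item.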

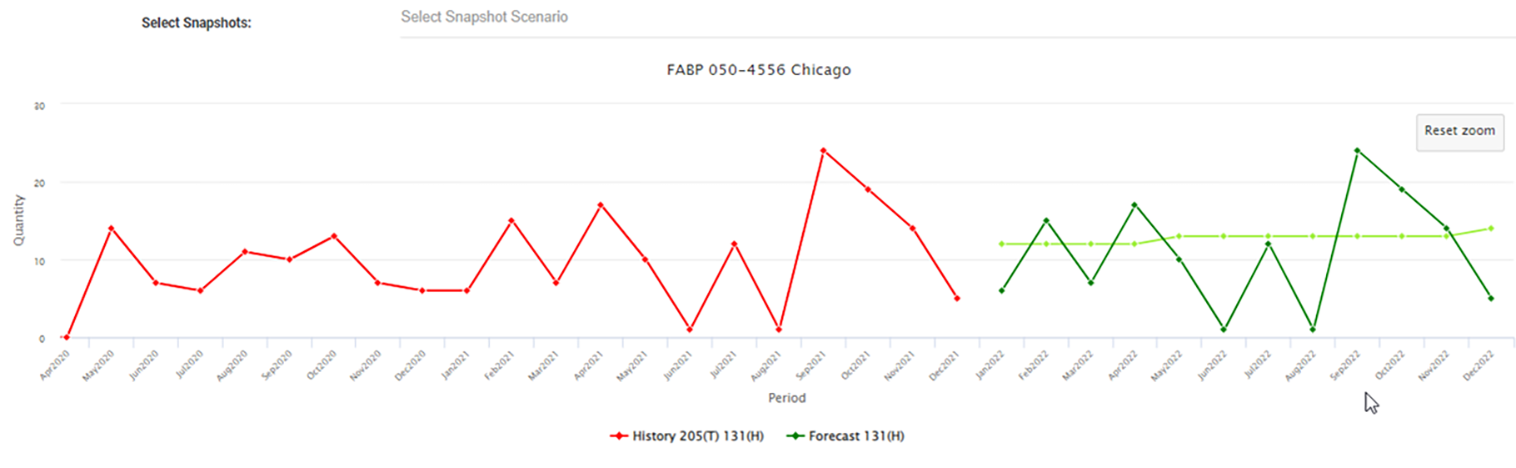

5) Amount of History. Are you limiting the model by only feeding it the most recent demand history, or are you feeding all available history to the demand forecasting software? The more history you feed the model, the more data must be analyzed and the longer it is going to take.

6) Additional analytical processing. So far, we’ve imagined feeding items’ demand history in and getting forecasts out. But the process can also involve additional analytical steps that can improve results. Examples include:

a) Outlier detection and removal to minimize the distortion caused by one-off events like storm damage.

b) Machine learning that decides how much history should be used for each item by detecting regime change.

c) Causal modeling that identifies how changes in demand drivers (such as price, interest rate, customer sentiment, etc.) impact future demand.

d) Exception reporting that uses data analytics to identify unusual situations that merit further management review.
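As an example of one such step, here is a minimal sketch of outlier handling (item a above). It uses a median-based rule, the modified z-score built on the median absolute deviation, and clips extreme values rather than deleting them. This is one common technique chosen for illustration; the function name and the 3.5 threshold are assumptions, and it is not a description of how any particular product detects outliers.

```python
from statistics import median


def clip_outliers(history, threshold=3.5):
    """Clip values whose modified z-score exceeds `threshold`.

    Uses the median absolute deviation (MAD), which is itself robust
    to the outliers being detected. Returns a new list.
    """
    values = list(history)
    med = median(values)
    mad = median([abs(x - med) for x in values])
    if mad == 0:
        return values  # no measurable spread, so nothing to clip against
    limit = threshold * mad / 0.6745  # 0.6745 scales MAD to a normal std dev
    lower, upper = med - limit, med + limit
    return [min(max(x, lower), upper) for x in values]


# Made-up history with a one-off spike in the sixth period.
demand = [10, 12, 11, 13, 12, 95, 11, 12]
print(clip_outliers(demand))
```

Each extra pass like this adds to the compute time per item, which is the trade-off this factor describes.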

The Rest of the Story. It’s also critical to understand that the time to get an answer involves more than the speed of forecasting computations per se. Data must be loaded into memory before computing can begin. Once the forecasts are computed, your browser must load the results so that they may be rendered on screen for you to interact with. If you re-forecast a product, you may choose to save the results. If you are working with product hierarchies (aggregating item forecasts up to product families, families up to product lines, etc.), the new forecast is going to impact the hierarchy, and everything must be reconciled. All of this takes time.
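The hierarchy step can be sketched as a bottom-up roll-up: after an item is re-forecast, the totals for its family are recomputed. The mapping of items to families and all names below are hypothetical, and real systems may reconcile in other ways (for example top-down or middle-out).

```python
from collections import defaultdict


def roll_up(item_forecasts, item_to_family):
    """Sum item-level forecasts into family-level totals (bottom-up)."""
    family_totals = defaultdict(float)
    for item, forecast in item_forecasts.items():
        family_totals[item_to_family[item]] += forecast
    return dict(family_totals)


# Made-up items and families, for illustration only.
item_to_family = {"bolt-m6": "fasteners", "bolt-m8": "fasteners", "drill-12v": "tools"}
item_forecasts = {"bolt-m6": 120.0, "bolt-m8": 80.0, "drill-12v": 15.0}

before = roll_up(item_forecasts, item_to_family)
item_forecasts["bolt-m8"] = 100.0   # the planner re-forecasts one item
after = roll_up(item_forecasts, item_to_family)
print(before["fasteners"], "->", after["fasteners"])
```

Changing a single item forces every level above it to be recomputed, so the cost of a re-forecast grows with the depth and breadth of the hierarchy.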

Fast Enough for You? When you are evaluating software to see whether your need for speed will be satisfied, all of this can be tested as part of a proof of concept or trial offered by demand planning software solution providers. Test it out, and make sure that the compute, load, and save times are acceptable given the volume of data and forecasting methods you want to use to support your process.